For the past two years, I have been applying my engineering background to computational neuroscience research at the Serre Lab, where I study how the visual system functions under the supervision of Prof. Thomas Serre.

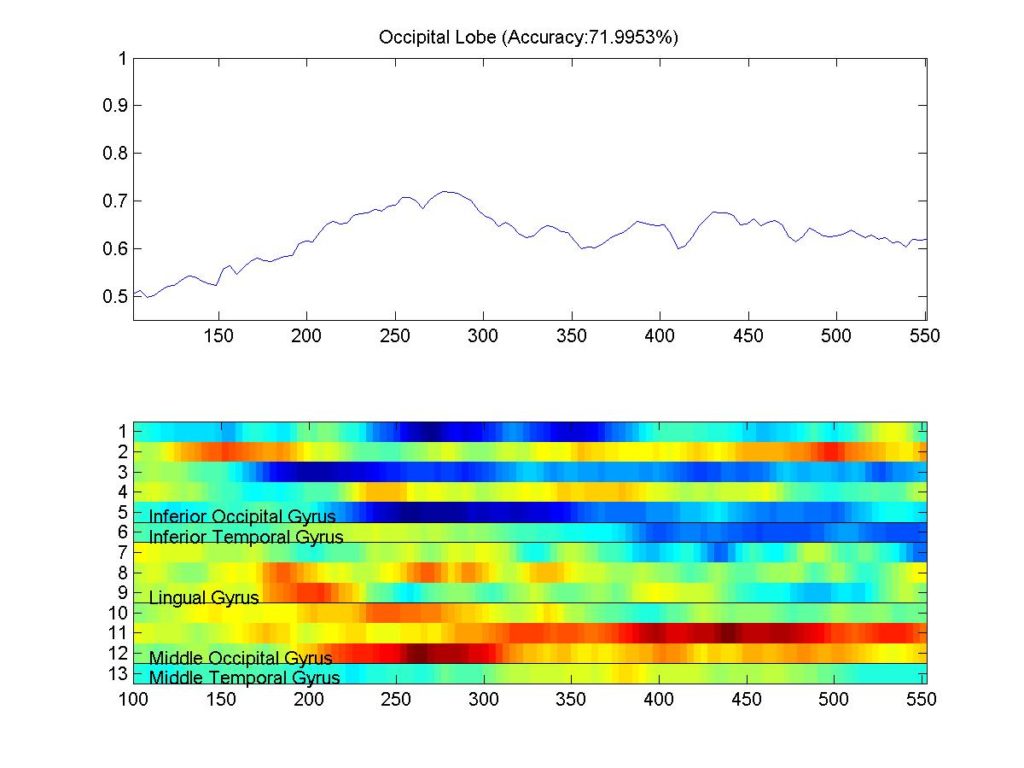

During my first year, I worked on rapid object categorization experiments that investigate how information propagates throughout the brain during object recognition. These experiments relied on advanced multivariate machine learning techniques to decode brain states from electrocorticography (ECoG) and magnetoencephalography (MEG) data recorded from human and non-human primate subjects. From these experiments, I outlined the time course and spatial progression of visual object recognition along the ventral stream.
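To give a flavor of what decoding brain states over time means in practice, here is a minimal sketch in plain Python. It is not the pipeline used in these experiments: the nearest-centroid classifier, the leave-one-out cross-validation, the `data[trial][time][channel]` layout, and the synthetic recordings are all assumptions chosen for illustration. The idea is to train and test a classifier separately at each time point and see when the stimulus category becomes readable from the neural signal.

```python
import random

def nearest_centroid_predict(train_X, train_y, x):
    """Predict the label whose mean training pattern is closest to x."""
    centroids = {}
    for label in set(train_y):
        rows = [r for r, y in zip(train_X, train_y) if y == label]
        centroids[label] = [sum(col) / len(rows) for col in zip(*rows)]

    def sq_dist(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    return min(centroids, key=lambda lab: sq_dist(centroids[lab], x))

def decode_over_time(data, labels):
    """Return leave-one-out decoding accuracy at every time point.

    data is indexed as data[trial][time][channel] (hypothetical layout).
    """
    n_trials = len(data)
    n_times = len(data[0])
    accuracies = []
    for t in range(n_times):
        X = [trial[t] for trial in data]  # one channel pattern per trial
        correct = 0
        for i in range(n_trials):
            # Hold out trial i, train on the rest, test on trial i.
            train_X = X[:i] + X[i + 1:]
            train_y = labels[:i] + labels[i + 1:]
            if nearest_centroid_predict(train_X, train_y, X[i]) == labels[i]:
                correct += 1
        accuracies.append(correct / n_trials)
    return accuracies

# Synthetic "recordings": pure noise, except that category 1 shifts
# every channel at one time point (signal_time).
rng = random.Random(0)
n_trials, n_times, n_channels = 40, 5, 8
signal_time = 3
labels = [i % 2 for i in range(n_trials)]
data = []
for y in labels:
    trial = []
    for t in range(n_times):
        offset = 5.0 if (t == signal_time and y == 1) else 0.0
        trial.append([rng.gauss(0, 1) + offset for _ in range(n_channels)])
    data.append(trial)

accuracies = decode_over_time(data, labels)
for t, acc in enumerate(accuracies):
    print(f"time point {t}: accuracy {acc:.2f}")
```

In this toy setup, accuracy should hover near 50% at the noise-only time points and rise sharply at the one carrying the category signal. Real analyses would use a proper machine learning library and far more careful cross-validation.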

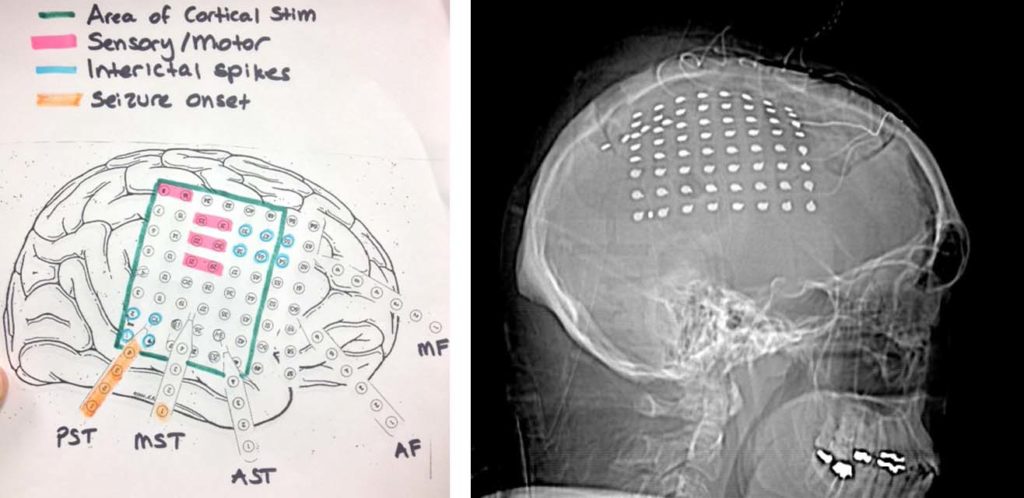

For my senior honors thesis I decided to investigate neural dynamics during natural vision. I used ECoG data collected from epileptic patients at Rhode Island Hospital who underwent surgical treatment for their condition. Besides being one of the first ECoG initiatives in Rhode Island and at Brown, this experiment promised to break new ground by using natural visual stimuli, unlike the majority of previous visual neuroscience experiments.

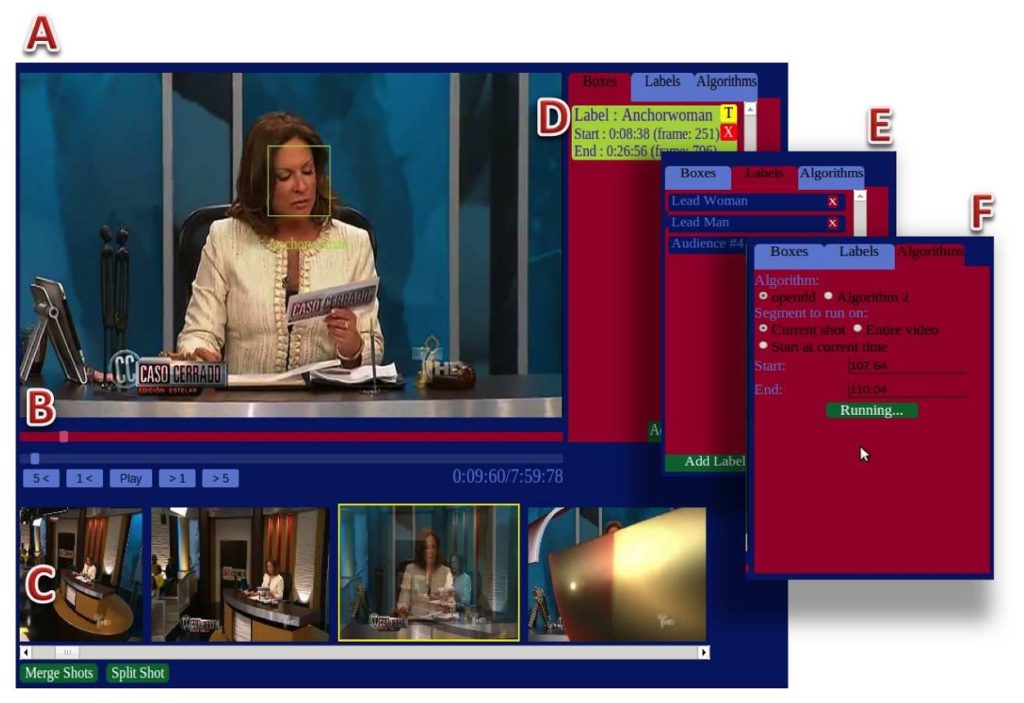

Instead of simplistic, highly constrained stimuli, patients were shown a film of their choosing while we recorded neural and eye tracking data. My experiment aimed to test theories of visual processing, including object recognition and visual scene processing, using realistic stimuli and advanced machine learning analyses. Multivariate techniques of this kind are needed to cope with the overwhelming size and complexity of neural data. In addition, computer vision techniques such as face detection and object tracking were used to automatically annotate the videos shown.

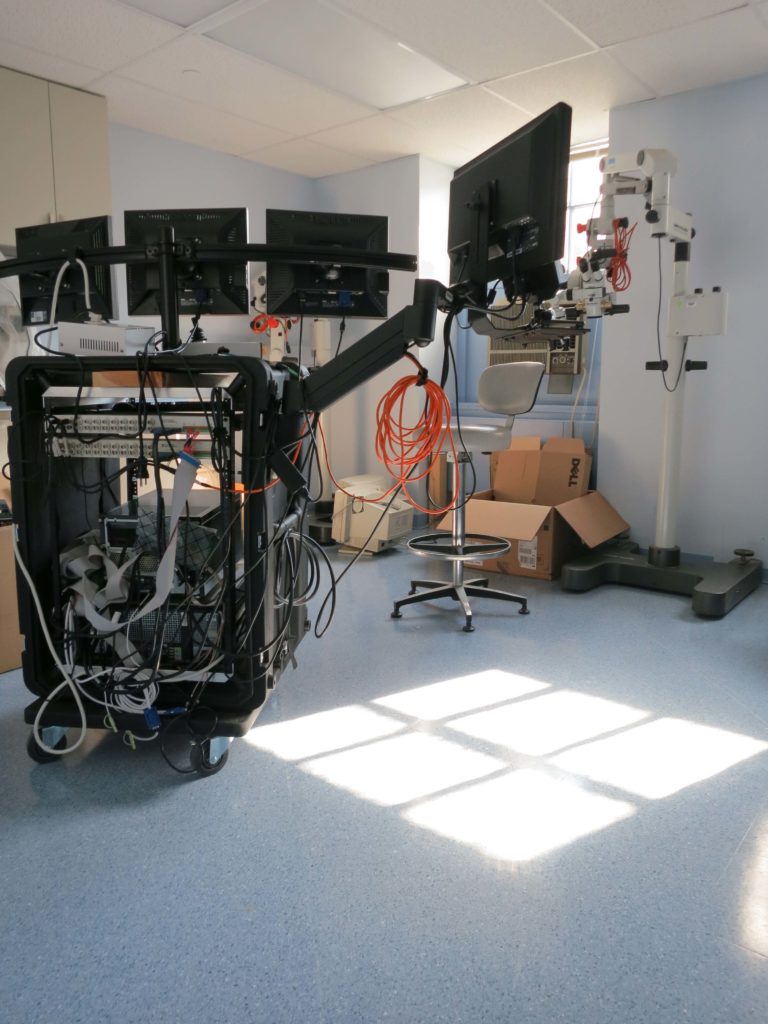

I also helped design and build a mobile experimental system that remotely tracks gaze without head constraints and delivers visual stimuli with high temporal precision. In addition to eye tracking data, the system can record local field potentials (LFPs) from clinical subdural macroelectrodes as well as single-unit activity from microelectrode arrays. We used this system to record from two patients, and it is currently being used by all other Brown research teams for multi-unit and ECoG recordings.

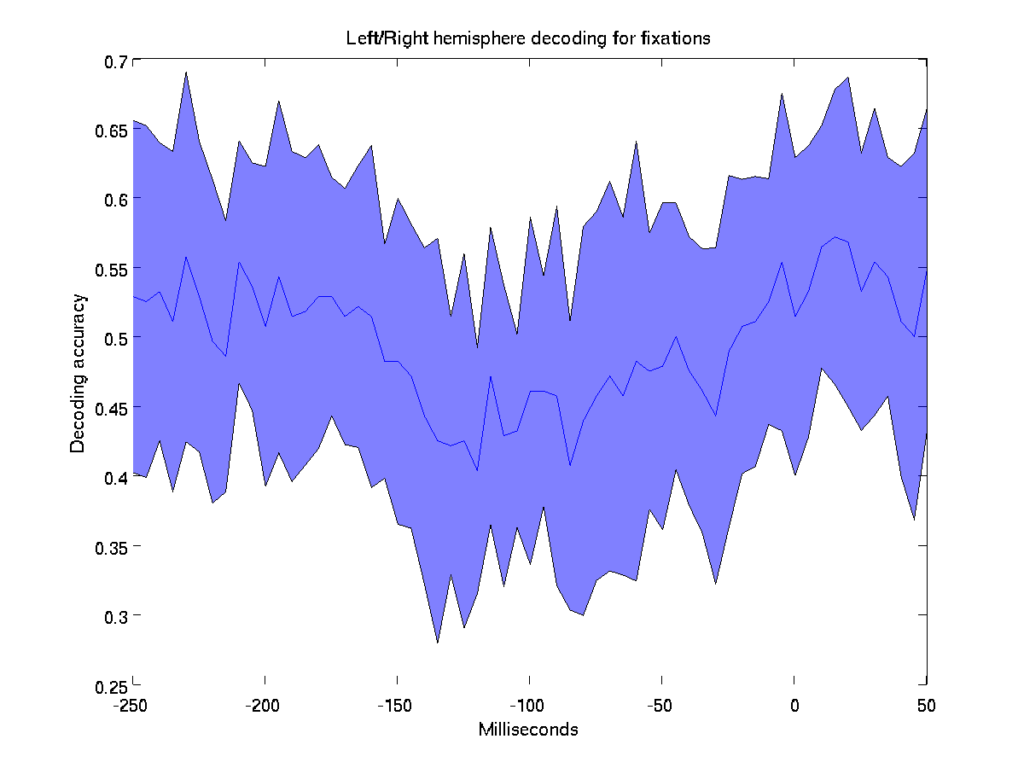

After collecting, pre-processing, and synchronizing the data, it came time to run neural decoding analyses to uncover some of the brain mechanisms underlying natural, everyday vision. I considered many analyses, covering topics such as attentional delay, saccade planning, and human face recognition. However, after months of coding, all my results yielded decoding accuracies around chance level. My belief is that the results were compromised by various confounding factors, and that a more controlled experimental paradigm might yield better insights.
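For readers unfamiliar with the term, "around chance level" can be made concrete with a permutation test. The sketch below is illustrative only and is not the analysis code from this project: the function names and toy predictions are hypothetical. It uses a simplified variant that shuffles the true labels against a fixed set of predictions, whereas a stricter version would retrain the classifier on each shuffle.

```python
import random

def accuracy(predicted, actual):
    """Fraction of trials where the predicted label matches the true one."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)

def permutation_p_value(predicted, actual, n_permutations=2000, seed=0):
    """Estimate how often shuffled labels score at least as well as the real ones."""
    rng = random.Random(seed)
    observed = accuracy(predicted, actual)
    shuffled = list(actual)
    at_least_as_good = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)  # break any link between predictions and labels
        if accuracy(predicted, shuffled) >= observed:
            at_least_as_good += 1
    # The +1 terms keep the estimated p-value from ever being exactly zero.
    p_value = (at_least_as_good + 1) / (n_permutations + 1)
    return observed, p_value

# Toy data: 100 trials, two balanced classes.
actual = [i % 2 for i in range(100)]
good_predictions = list(actual)          # a decoder that is always right
chance_predictions = [0, 1, 1, 0] * 25   # agrees with the labels on half the trials

acc_good, p_good = permutation_p_value(good_predictions, actual)
acc_chance, p_chance = permutation_p_value(chance_predictions, actual)
print(f"good decoder:   accuracy={acc_good:.2f}, p={p_good:.4f}")
print(f"chance decoder: accuracy={acc_chance:.2f}, p={p_chance:.4f}")
```

A decoder at chance produces an accuracy that shuffled labels match or beat most of the time, so its p-value is large. That is the situation described above.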

Despite the poor results, the project taught me a lot about methods in neuroscience research. Thanks to the multidisciplinary nature of this project, I have had the opportunity to collaborate in a meaningful way with nationally recognized neurosurgeons (Rees Cosgrove, Wael Asaad) and prominent computational neuroscience researchers (Thomas Serre, Leigh Hochberg).

You can read the unfinished paper here: Neural dynamics of natural vision (Unfinished due to poor results).